MongoDB’s aggregate command (and its predecessor find) take some time to get used to.

This is seen as a barrier to entry for those coming from a relational background as well as those who are early in the journey of MongoDB adoption.

With all the new AI and awesome natural language tools, can we get some help for generating queries? “Yes!” says the Compass team.

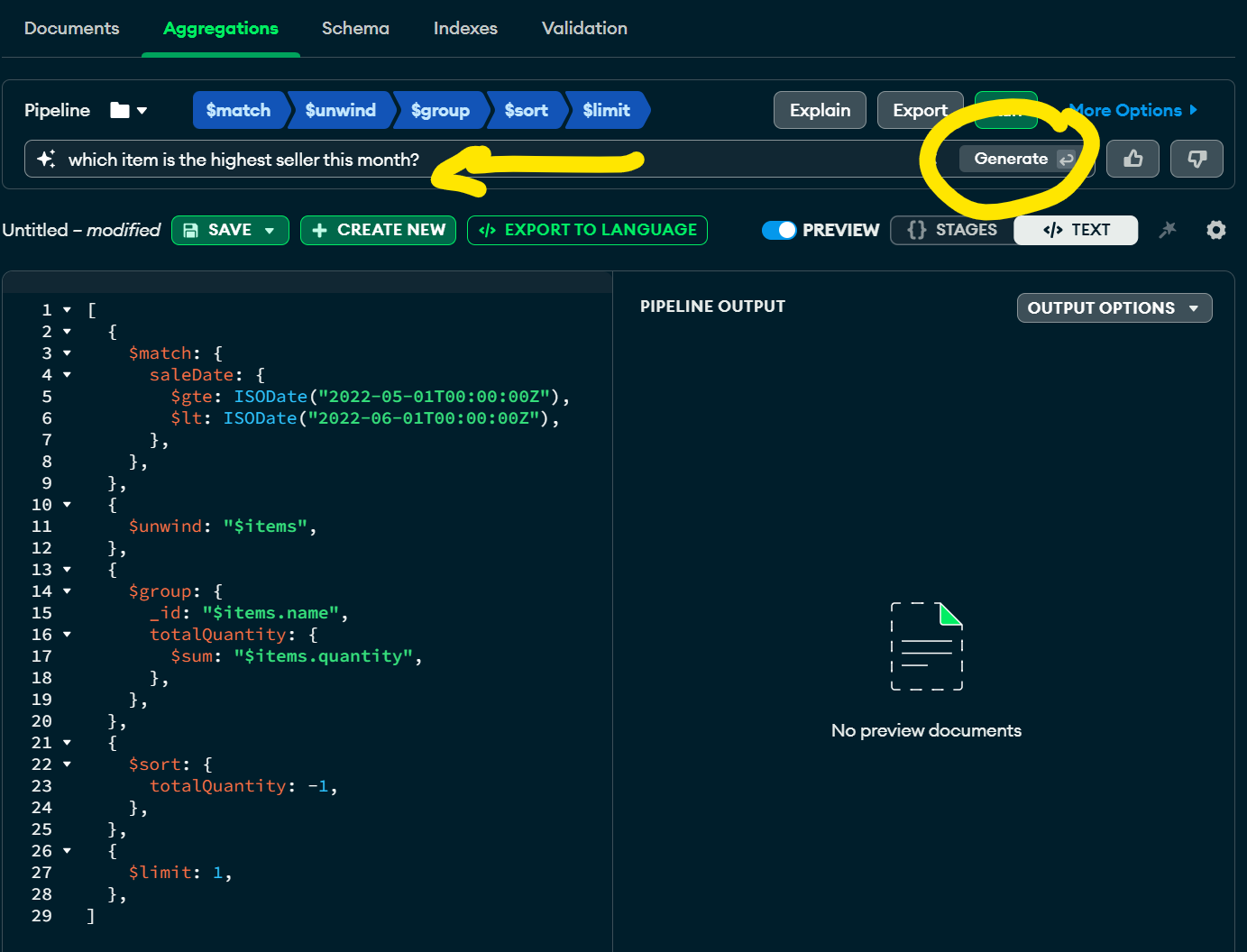

Announced at the .Local event in London, Compass is rolling out a natural language text prompt which translates your natural language input into a properly stated query using either the find syntax or an aggregation pipeline (MQL).

I ran some experiments to kick the tiers on this feature.

Taking a Test Run

The following are prompts and results I ran against a collection which has documents like the one below. Documents have different field values. The fields in the sub-document contact are sparse: a document may have one, two, or all of the fields cell, email, and fax. These fields - if missing - are absent (not assigned null).

{ |

Prompt

How How many Elmers are there?

Generated Query

[ |

Success. The query generated answers the prompted text as intended. Note that the field name is nowhere in the prompt. The tool understood that we’re looking for Elmers (a proper name, plural) and figured it should look in the field name. Nice!

Prompt

What are the 3 most common names of prospects with the status “customer” who have an email in the system?

Generated Query

[ |

Correct. The AI understood the AND filter for documents with the status set to “customer” and email existing.

It used the common trope for “top N by V”, which groups by the value of the field (name) and produces a count per grouping, then $sorts by the count descending, then $limits to the intended number.

I noted that the generated queries use the old-school {$sum: 1} trick to produce the count. Understandable since Compass may be used on older MongoDB version that lacked the $count group accumulator operator, but this is a bit more cryptic for the untrained when reading the resultant pipeline.

Let’s try some more:

Prompt

Which customers have and odd id number, and a name that starts with D?

Generated Query

[ |

Nicely interpreted. The $mod operator is a bit obscure, but fits the bill. The name is compared with a regex match on the expression /^D/. Left-anchored regex matches are the lesser evil of non-anchored matches, and can use indexes. But maybe a range expression such as {name: {$gte: "D", $lt: "E"}} would perform just as well?

I decided to change one little thing in the query: change from an upper case D (which is by the way how my data is - names are capitalized in the dataset) to a lower case d.

Prompt

Which customers have and odd id number, and a name that starts with d?

Generated Query

[ |

Interestingly, the tool decided to add a $project stage to the pipeline. Re-reading the prompt, I can see that I under specified which fields to return. But what caused the previous query to not $project at all and this one to do so is a mystery to me. Not strictly wrong, but a mystery nonetheless.

A fix to this is to explicitly specify what to show.

The prompt Which customers have and odd id number, and a name that starts with d? Give me their name labeled "Customer Name" and their id labeled "Customer Id" only. indeed generated the expected pipeline.

[ |

The projection renames the fields as the prompt described.

My standing conclusion is to be as explicit and concise as possible with the prompt. I guess this should be expected.

Prompt

How likely are people to have both a cell phone and a fax number?

Generated Query

[ |

Chalk this one to “not correct”. The prompt intent was to generate a ratio or number representing the chance or likelihood of a document to contain both fields. The generator understood the and condition correctly in the $match stage. But it failed to produce an aggregation which compares the count of documents matching the criteria vs. those which do not.

Non-English

Many people speak English as a second language. But many do not. How about typing in your native tongue?

I gave it a whirl with Hebrew. Not remotely a popular language, so I can’t imaging the team built specific support for it.

This query asks to find those who are named “יוסי“.

Prompt

מצא לי מי ששמם יוסי

Generated Query

{"name": "יוסי"} |

The generator correctly figured out the pluralized word for “name” in my query, and determined the field to search against is name. It understood the value sought was “יוסי“, and kept the text as is - again, correct. It did not try and translate the value into english (Yossi), but that’s a good thing. I would expect that if I knew the name was in english, i’d maybe type a hybrid question such as

Prompt

מצא לי מי ששמם Elmer

Generated Query

{"name": "Elmer"} |

Compass did the needful and produced my expected query. A note here is that BiDi editing isn’t perfect… Hebrew is written left to right, and the textbox moved the word “Elmer” to the very “end” of the sentence from an English perspective, but a native Hebrew speaker would expect it to be on the very left, at the end from a left-to-right perspective. But the results were correct, and I won’t argue with “it worked.”

How about some aggregation? The Hebrew query asks how many people have an email but not a cell phone.

Prompt

לכמה אנשים יש דואל אבל אין סלולרי

Generated Query

[ |

Correct, again. The Hebrew prompt used the acronym term for “email” (דואל) and the common word for “mobile phone” or “cell phone” which is “cellular” (סלולרי). Not only did it match the terms to the fields cell and email, it also figured out correctly that the fields are in the sub-document contact and generated the dot-path to the fields.

Conclusion

I have reservations about using natural language to describe queries. For one, spoken language was not designed to be concise, and the range of misinterpretation that can be cause by using imprecise language as well as ambiguities within the language itself can yield wild results. I was surprised how well this new feature performed. Color me impressed. Not only has the generator created my intended queries, it picked up on nuances that I did not imagine it would.

Can there be test cases where it comes up with the wrong answer? Yeah, sure. But id performed remarkably well on what I gave it, and in doing so proved it is suitable for a wide enough audience for a wide enough set of reporting and analysis needs.

Am I going to use this daily? Time will tell. For simple quests? Maybe. For more complex ones? Maybe also - as I can see it creating a skeleton query for me to approve or refine. Does this replace learning and knowing the breadth and depth of MQL - no. But for newcomers and occasional analysis this may just do the trick.

Give it a try, see what you think!